Anyone who works with PowerShell knows that auditing is not a built-in feature and that change tracking must be done manually. This article walks through two scenarios that call for an appropriate level of auditing and shows several techniques for handling it. The solutions are not specific to these scenarios and can be customized to fit your needs. Auditing is a broad term for what is shown here. Simply put, the script code in this article exports important pieces of information to text files for later consumption.

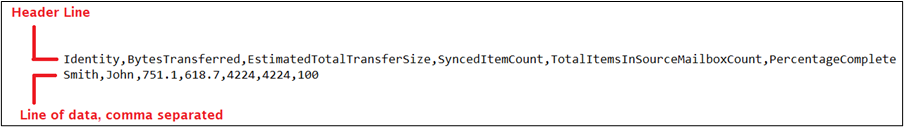

When creating these text files, we can use the Out-File cmdlet, which writes information to files. We can build simple CSV files by writing a header line and then one line of comma-separated values per record. Below is a sample of a CSV:
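As a minimal sketch of the header-plus-rows approach described above, the following builds a small CSV with Out-File and reads it back. The file name, column names and sample rows are assumptions made up for illustration; they are not taken from the original script.

```powershell
# Hypothetical file name and sample rows, used only for illustration.
$CsvFile = Join-Path ([System.IO.Path]::GetTempPath()) 'SampleUsers.csv'

# Header line first; without -Append this creates (or overwrites) the file.
'DisplayName,Alias,PrimarySmtpAddress' | Out-File -FilePath $CsvFile

# One comma-separated line per record, appended so nothing is overwritten.
'Jane Doe,jdoe,jane.doe@contoso.com' | Out-File -FilePath $CsvFile -Append
'John Smith,jsmith,john.smith@contoso.com' | Out-File -FilePath $CsvFile -Append

# The file can be read back as objects for later consumption.
$Rows = @(Import-Csv -Path $CsvFile)
```

Values that themselves contain commas (a display name such as "Doe, Jane") would need to be wrapped in double quotes when the line is built by hand.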

Log File Creation

Before we walk through either scenario, let's create basic log files for tracking and auditing purposes. First, we need to establish a base location for these reporting files. For this, we will use a variable ($BasePath) to store the current path. A folder called 'Reports' under that path will be the destination for any log files.

Before storing any files in this folder, we will check for the existence of the folder. In case it does not exist, we create it:
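The base path and folder check described above could look roughly like this. $BasePath and the 'Reports' folder name come from the text; $ReportPath is an assumed variable name.

```powershell
# Base path: the directory the session is currently in.
$BasePath = (Get-Location).Path

# $ReportPath is an assumed name for the 'Reports' folder under the base path.
$ReportPath = Join-Path $BasePath 'Reports'

# Create the folder only if it is not already there.
if (-not (Test-Path -Path $ReportPath -PathType Container)) {
    New-Item -Path $ReportPath -ItemType Directory | Out-Null
}
```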

Additionally, we'll set the width of the PowerShell session window, as this makes logging long lines easier.
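One way to widen the session is to enlarge the console buffer through $Host.UI.RawUI. This is a sketch under assumptions: the width of 500 is an arbitrary value, and not every host (for example, a non-interactive or non-Windows session) allows the buffer to be resized, so the attempt is wrapped in try/catch.

```powershell
# Assumed value; pick whatever fits your longest log line.
$DesiredWidth = 500

try {
    # BufferSize is a value type, so modify a copy and assign it back.
    $Buffer = $Host.UI.RawUI.BufferSize
    if ($Buffer.Width -lt $DesiredWidth) {
        $Buffer.Width = $DesiredWidth
        $Host.UI.RawUI.BufferSize = $Buffer
    }
}
catch {
    # Some hosts do not support resizing; logging still works without it.
    Write-Verbose "Could not resize the buffer in this host: $($_.Exception.Message)"
}
```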

To help keep track of when events occurred, we will use a variable to store a one-liner to invoke as needed:
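A script block is one way to store such a one-liner so that it is evaluated each time it is invoked, rather than once when the variable is assigned. The variable name $Date and the format string are assumptions, not the original code.

```powershell
# The script block is not run here; it is only stored.
# The format string is an assumption (US-style, safe for file names).
$Date = { Get-Date -Format 'MM.dd.yyyy-hh.mm-tt' }

# Invoke it with the call operator whenever a fresh timestamp is needed.
$Stamp = & $Date
```

Storing the result of Get-Date directly would instead freeze the time at the moment of assignment, which is rarely what a log needs.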

Lastly, we'll establish our log file names:
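The log file names might be defined along these lines. All three file names and variable names are hypothetical; the only detail taken from the text is that the files live in the 'Reports' folder.

```powershell
# Assumed location: the 'Reports' folder under the current path.
$ReportPath = Join-Path (Get-Location).Path 'Reports'

# Hypothetical names: one running log of actions, plus before/after snapshots.
$LogFile    = Join-Path $ReportPath 'UPN-Actions.log'
$BeforeFile = Join-Path $ReportPath 'UPN-Before.csv'
$AfterFile  = Join-Path $ReportPath 'UPN-After.csv'
```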

OK. On to the scenarios!

Scenario One

For our first scenario, we have a large company that is migrating from on-premises to Microsoft 365. The environment has a simple Active Directory configuration: one forest with one domain, with the forest and domain functional levels both set to Windows Server 2012 R2. In terms of size, we are looking at over 40,000 users and thousands of groups.

Prior to migration, it was decided that each user's User Principal Name (UPN) should match their primary SMTP address to provide a good user experience. With some review, we found that 14,000+ accounts did not match and would need to be changed. Through the grapevine, we had also heard that some apps might be restamping accounts with incorrect information (that is, changing UPNs). No apps were identified as relying on the UPN for login, but we wanted to keep track of all changes in case a reversal was needed.

First, we need to write a timestamp for the start of the script. Note that the date format used is the one common in the US; international readers may need something different:

Out-File is the cmdlet that writes values to the log file we defined earlier.
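A start-of-run entry could be written as follows. The log file location and the US-style format string are assumptions chosen for this sketch.

```powershell
# Hypothetical log file in the temp folder so the sketch can run anywhere.
$LogFile = Join-Path ([System.IO.Path]::GetTempPath()) 'Audit-Demo.log'

# US-style date format (assumed); adjust the pattern for other locales.
$Date = { Get-Date -Format 'MM/dd/yyyy hh:mm:ss tt' }

# No -Append here: the first line starts a fresh log for this run.
"Script started: $(& $Date)" | Out-File -FilePath $LogFile
```

The same line with a different label and the -Append switch can close out the run at the end of the script.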

Log Initial Values

For the first part of the log, we define our new log file and the header line of the file (think column headers in a CSV):

Then, for each user, we take the property values for the user's Display Name, Alias, Primary SMTP Address and User Principal Name (UPN):
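Put together, the header line and the per-user lines might look like the sketch below. The sample objects stand in for real mailbox objects (such as those returned by Get-Mailbox), and the file name is hypothetical.

```powershell
# Sample objects standing in for real mailbox objects; values are made up.
$Users = @(
    [pscustomobject]@{ DisplayName = 'Jane Doe';   Alias = 'jdoe';   PrimarySmtpAddress = 'jane.doe@contoso.com';   UserPrincipalName = 'jdoe@contoso.local' }
    [pscustomobject]@{ DisplayName = 'John Smith'; Alias = 'jsmith'; PrimarySmtpAddress = 'john.smith@contoso.com'; UserPrincipalName = 'jsmith@contoso.local' }
)

# Hypothetical "before" file; the header line creates or overwrites it.
$BeforeFile = Join-Path ([System.IO.Path]::GetTempPath()) 'UPN-Before.csv'
'DisplayName,Alias,PrimarySmtpAddress,UserPrincipalName' | Out-File -FilePath $BeforeFile

foreach ($User in $Users) {
    # Display names may contain commas, so that field is quoted.
    $Line = '"{0}",{1},{2},{3}' -f $User.DisplayName, $User.Alias, $User.PrimarySmtpAddress, $User.UserPrincipalName
    $Line | Out-File -FilePath $BeforeFile -Append
}
```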

Notice that each line is appended, since we do not want to overwrite the file. We can also be more comprehensive and log each user processed:

** Note that the variable name used ($Output or $Line) is inconsequential; any valid PowerShell variable name will do.
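Logging each processed user to the running log could be as simple as the following sketch. The aliases, the log file name and the message wording are assumptions.

```powershell
# Hypothetical log file and sample aliases, for illustration only.
$LogFile = Join-Path ([System.IO.Path]::GetTempPath()) 'UPN-Processed.log'
$Date    = { Get-Date -Format 'MM/dd/yyyy hh:mm:ss tt' }
$Aliases = 'jdoe', 'jsmith'

# First line starts a fresh file; everything after it is appended.
'Users processed:' | Out-File -FilePath $LogFile

foreach ($Alias in $Aliases) {
    # $Output could just as well be called $Line; the name does not matter.
    $Output = "$(& $Date) Processing user $Alias"
    $Output | Out-File -FilePath $LogFile -Append
}
```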

Log Change and Failures

This section of code changes the user's UPN. It is set up as a Try {} Catch {} block so that we can log successful changes (in the Try {} block) or failures (in the Catch {} block).

In the middle, notice $_.Exception.Message. This expression captures the PowerShell error message that would normally have been displayed, so it can be copied to a log file for future examination.
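The Try/Catch logging pattern can be sketched as follows. Set-DemoUpn is a hypothetical stand-in for whatever cmdlet makes the real change, and the users and file name are made up. With real cmdlets, adding -ErrorAction Stop matters because only terminating errors reach the Catch block.

```powershell
# Hypothetical stand-in for the real change; it rejects malformed UPNs.
function Set-DemoUpn {
    [CmdletBinding()]
    param([string]$Alias, [string]$NewUpn)
    if ($NewUpn -notmatch '^[^@\s]+@[^@\s]+$') {
        throw "'$NewUpn' is not a valid UPN for $Alias."
    }
}

$LogFile = Join-Path ([System.IO.Path]::GetTempPath()) 'UPN-Changes.log'
'UPN change log' | Out-File -FilePath $LogFile

# Made-up changes: the second one is intentionally invalid.
$Changes = @(
    [pscustomobject]@{ Alias = 'jdoe';   NewUpn = 'jane.doe@contoso.com' }
    [pscustomobject]@{ Alias = 'jsmith'; NewUpn = 'not-a-upn' }
)

foreach ($Change in $Changes) {
    try {
        # -ErrorAction Stop turns non-terminating errors into catchable ones.
        Set-DemoUpn -Alias $Change.Alias -NewUpn $Change.NewUpn -ErrorAction Stop
        "SUCCESS: $($Change.Alias) changed to $($Change.NewUpn)" | Out-File -FilePath $LogFile -Append
    }
    catch {
        # The message that would have gone to the screen goes to the log instead.
        "FAILED: $($Change.Alias): $($_.Exception.Message)" | Out-File -FilePath $LogFile -Append
    }
}
```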

Log Changed Values

This code will look exactly like the section we used to log initial settings, except our destination file will be different, since we need a before and after file:

End of the Run

Lastly, we will again add a timestamp for the end of the script.

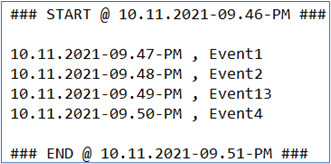

Below is a sample log file with a Start/End timestamp as well as a log of events in the middle:

On to the next scenario!

Scenario Two

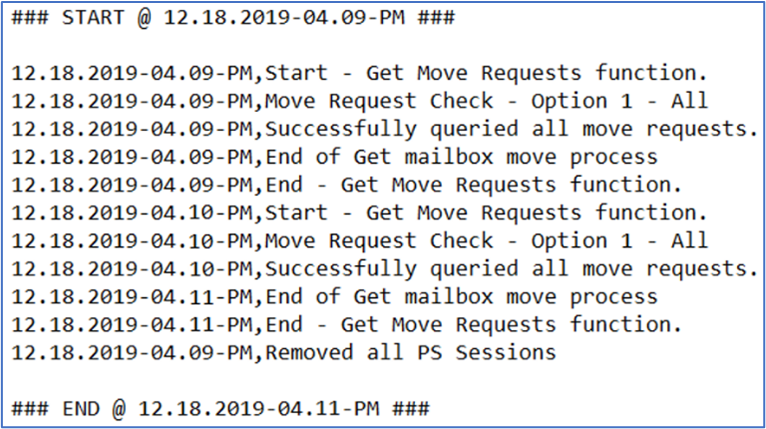

For this scenario, we have an Exchange-to-Exchange Online migration where we are moving over 10,000 mailboxes, from multiple geographical regions to a single Exchange Online tenant. While moving these users, we have a script (like the one previously written about here and here) that administrators are utilizing for migrations. To further enhance this script, we can use PowerShell to generate logs for critical items in the script.

This is the summary of logging we can perform:

- Write to a log every action that occurs, as well as a start and stop date for script execution.

- Log error - capture the error PowerShell would have generated to the screen.

At the start of the script, we write an appropriate header to the log file:

Detailed Move Reports

For this function, the logging is just exporting the entire Report value, which provides a detailed analysis of a mailbox move to the cloud and is used to determine why the move has failed or is paused. Below, we grab the mailbox's primary SMTP address, specify an output file (specific to the mailbox move we are querying), grab the mailbox's report and then export that entire report to the file we specified:
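The four steps above might be sketched like this. Because the real calls need an Exchange session, the address and report content here are placeholders; the commented lines show the assumed shape of the real calls using Get-Mailbox and Get-MoveRequestStatistics with -IncludeReport. The output file naming is also an assumption.

```powershell
# Step 1: primary SMTP address. Placeholder value; in a real session, roughly:
#   $Smtp = (Get-Mailbox -Identity $Identity).PrimarySmtpAddress
$Smtp = 'jane.doe@contoso.com'

# Step 2: an output file specific to this mailbox move (hypothetical naming).
$OutputFile = Join-Path ([System.IO.Path]::GetTempPath()) "$Smtp-MoveReport.txt"

# Step 3: the move report. Placeholder text; in a real session, roughly:
#   $Report = (Get-MoveRequestStatistics -Identity $Smtp -IncludeReport).Report
$Report = @(
    'Placeholder report line 1'
    'Placeholder report line 2'
)

# Step 4: export the entire report to the file chosen in step 2.
$Report | Out-File -FilePath $OutputFile
```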

Log Mailbox Moves

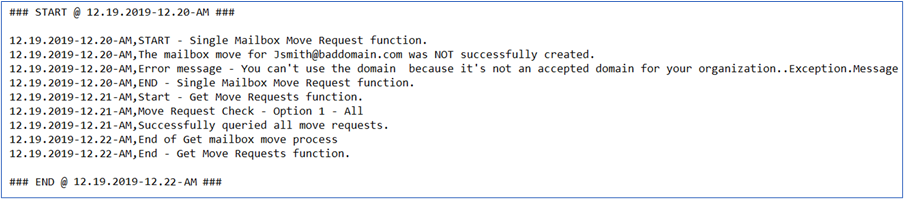

For this part of the script, we create a new move request for a mailbox migration, again using a Try {} Catch {} block. If the creation succeeds, then this is logged and if there is a failure, the error message is logged as well:

Remove Move Request, Last Example

This code block removes move requests, perhaps because the user needs to wait or is being dropped from the current set of moves because they are leaving the company. The process is similar to the new move request shown previously:
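Since creating and removing a move request follow the same log-on-success, log-on-failure shape, one option is a small shared helper. Invoke-LoggedAction is a hypothetical function written for this sketch, and the script blocks only simulate the outcome; the comments show where New-MoveRequest and Remove-MoveRequest would go in a real session.

```powershell
$LogFile = Join-Path ([System.IO.Path]::GetTempPath()) 'MoveRequests-Demo.log'
'Move request log' | Out-File -FilePath $LogFile

# Hypothetical helper: runs an action and logs either success or the error text.
function Invoke-LoggedAction {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string]$Description,
        [Parameter(Mandatory)][scriptblock]$Action,
        [Parameter(Mandatory)][string]$Path
    )
    $Stamp = Get-Date -Format 'MM/dd/yyyy hh:mm:ss tt'
    try {
        & $Action | Out-Null
        "$Stamp SUCCESS: $Description" | Out-File -FilePath $Path -Append
    }
    catch {
        "$Stamp FAILED: $Description : $($_.Exception.Message)" | Out-File -FilePath $Path -Append
    }
}

Invoke-LoggedAction -Description 'New move request for jane.doe@contoso.com' -Path $LogFile -Action {
    # Real session: New-MoveRequest ... -ErrorAction Stop
    $true
}

Invoke-LoggedAction -Description 'Remove move request for john.smith@contoso.com' -Path $LogFile -Action {
    # Real session: Remove-MoveRequest ... -Confirm:$false -ErrorAction Stop
    throw 'Simulated failure: move request not found.'
}
```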

Sample Log File Entries

Other Scenarios?

What scenarios can you come up with? Would you create separate logs for good and bad results? Another possible use of Out-File is documentation, for example, daily mailbox stats for all users in Exchange Online. In other words, there are many possibilities, so go forth and log, audit, or whatever else you need with PowerShell.

Important Note

The size of both environments matters: each involves well over 10,000 objects. Many Exchange cmdlets return only the first 1,000 results by default. Since both environments are large, using -ResultSize Unlimited with those cmdlets is the only way to get complete results. Another performance tip for large environments: if a cmdlet has a -Filter parameter, use it rather than piping everything to Where-Object. Filtering with Where-Object in a large environment can significantly increase the processing time of a script.
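The difference between filtering at the source and filtering afterwards can be illustrated without an Exchange session by using Get-ChildItem, which also has a -Filter parameter. The folder and file names are made up, and the commented Exchange lines are an assumed example of the same idea, not code from the original script.

```powershell
# Small demo folder with three files (names are made up).
$Demo = Join-Path ([System.IO.Path]::GetTempPath()) 'FilterDemo'
New-Item -Path $Demo -ItemType Directory -Force | Out-Null
'a' | Out-File -FilePath (Join-Path $Demo 'one.log')
'b' | Out-File -FilePath (Join-Path $Demo 'two.log')
'c' | Out-File -FilePath (Join-Path $Demo 'three.txt')

# Preferred: the filter is applied before objects reach the pipeline.
$Filtered = @(Get-ChildItem -Path $Demo -Filter '*.log')

# Same result, but every item is retrieved first and then discarded locally.
$Piped = @(Get-ChildItem -Path $Demo | Where-Object { $_.Extension -eq '.log' })

# Assumed Exchange equivalents (not run here; they need an Exchange session):
#   Get-Mailbox -ResultSize Unlimited -Filter "CustomAttribute1 -eq 'Migrate'"
#   Get-Mailbox -ResultSize Unlimited | Where-Object { $_.CustomAttribute1 -eq 'Migrate' }
```

With three files the difference is invisible, but with tens of thousands of mailboxes the second form transfers every object before discarding most of them.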

With ScriptRunner, you are always compliant and auditable

Governance and compliance readiness increasingly require complete traceability of all processes, and PowerShell scripts are no exception. With the external database, you can prove which scripts were executed on which system with which parameters, what the results were, and so on, over the required periods of time, whether you use one ScriptRunner host or several.

Connection to SQL server with fault tolerance

ScriptRunner Report/Audit DB Connector connects the ScriptRunner host to the database on a Microsoft SQL server. If you run multiple ScriptRunner hosts, they can all write to the same database. This means that you have all reporting information across all systems in a single database. When an action generates a report, it is first written to the circulation database on the ScriptRunner host. The connector also generates an XML file whose contents are automatically transferred to the database on the SQL server. A restart after errors ensures that no report is lost and that all data accumulated in the meantime is stored in the database.
